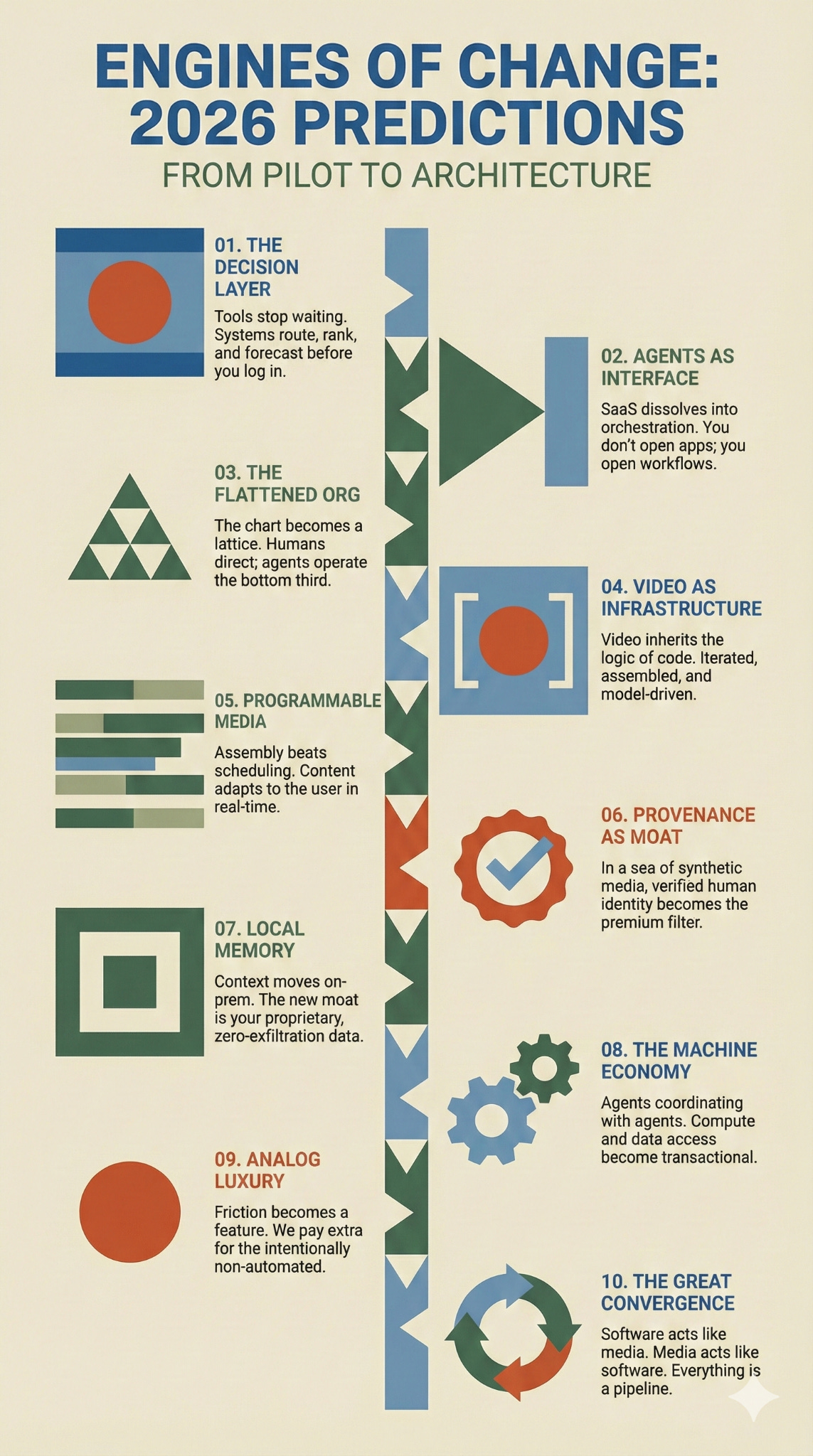

AI + Media: 2026 Predictions

From pilot to architecture

It’s prediction season once again. Everyone’s doing the annual ritual where we squint at a trendline and declare the future in 10 bullet points. Some of it is thoughtful, and a lot of it is just content.

I don’t want to add to the noise. I want to offer my own view of what the right signals really are. So here’s the lens I’m using for 2026: I’m not trying to predict the next model. I’m watching where the work moves.

AI is interesting, but as we have seen, it’s not actually changing the business. The thing that changes a business is where decisions get made, when they get made, and who gets to override them. That’s the real shift. Not the demo, not the prompt, not the benchmark. The operating behavior.

That’s why most of these predictions are not about new capabilities. They’re about defaults. About the parts of the stack that become mandatory. About the places where pilots stop being experiments and start being architecture.

2025 was the year everybody tried something. A few pilots …