AI Vertically Integrated

When and Where Control Moves Across the Stack

In past technology cycles, we witnessed cleaner, slower evolution. Disruption would crest. Markets would stabilize. Infrastructure would follow. Consolidation came later.

AI threw those rules out the window. Disruption, innovation, and adoption are happening at the same time. Entire workflows are being reconfigured while capital floods in and regulatory conversations accelerate. Early signals of a future contraction are already forming at the edges, even as new areas of expansion open up.

For the past two years, most coverage has focused on model capability. Larger context windows. Faster inference. Better outputs. The narrative has been horizontal expansion. More features. More tools. More experiments layered onto existing workflows.

That phase is maturing. What is emerging now is vertical alignment. Simulation, production, governance, monetization. Layers that once operated independently are beginning to concentrate inside the same ecosystems. What looked modular is becoming integrated.

This week’s stories are not breakthroughs. They are signals that control is migrating across adjacent layers of the stack.

Runway scales the rehearsal layer

Source: The Verge

Runway has reached a reported $5.3 billion valuation, fueled by its work on what it calls “world models.” The attention naturally goes to video generation. The more important move is upstream.

World models simulate environments before physical production begins. Lighting. Camera movement. Spatial continuity. Narrative pacing. Pre-production becomes programmable. Teams can test ideas before crews assemble and budgets lock. That changes the economics of risk.

But the relevance does not stop at media. World simulation is broadly applicable anywhere physical systems operate under uncertainty. Robotics, autonomous vehicles, warehouse automation, defense systems. If you can simulate edge cases before machines encounter them in the real world, you compress failure into rehearsal instead of deployment. Training becomes safer. Iteration becomes faster. Risk becomes cheaper to explore.

In that context, “video generation” is a narrow framing. Simulation is the product.

When uncertainty can be reduced earlier, more projects become financeable. When iteration becomes cheaper before production, creative authority shifts forward. The rehearsal space becomes computational infrastructure.

Why it matters

Studios do not struggle because imagination is scarce. They struggle because risk compounds. The same is true in robotics and automation. Simulation is becoming a financial instrument as much as a creative one. It reduces unknowns before capital, hardware, or people are committed. The company that owns the sandbox where decisions are rehearsed does not just influence aesthetics. It influences feasibility, and feasibility is leverage.

Amazon compresses the production pipeline

Source: Reuters

Amazon is building internal AI systems inside Amazon MGM Studios to streamline scheduling, budgeting, coordination, and post-production workflows.

This is framed as timeline compression, not creative replacement. Studios stall because coordination is expensive. If the path from greenlight to delivery shortens, iteration increases. Fewer delays. Fewer downstream surprises. More throughput per dollar.

But efficiency is only the first-order effect. A context-aware production system creates longer-term structural value. If assets, scenes, and story elements are generated and tracked inside a programmable pipeline, they become adaptable. The same story can be reassembled for different environments and viewing conditions.

We already produce different edits for broadcast, airline, and theatrical release. A context-aware system extends that logic. One version optimized for a phone during a commute. Another for a living room screen at night. Lighting, pacing, framing, even narrative emphasis could adjust based on device, bandwidth, or behavioral context.

Taken further, advertising and brand placement become programmable as well. A cereal box on a breakfast table could match the packaging used in your region rather than another market. Product placement stops being static and becomes versioned.

Compression enables iteration. Context enables variation. Amazon is not just a studio. It owns AWS. It controls compute, tooling, and distribution endpoints. Production acceleration sits inside a broader infrastructure footprint. If the same company manages asset generation, pipeline orchestration, and distribution telemetry, contextual variation is not speculative. It is operational.

Why it matters

Speed becomes structural when it embeds in infrastructure. But so does adaptability. Once pipeline acceleration and contextual recomposition are system-level capabilities rather than bespoke editorial decisions, they create durable advantage. Stories stop being single artifacts and become flexible assemblies.

Competitors either build comparable programmable pipelines or operate inside someone else’s. And whoever owns the pipeline owns the variation.

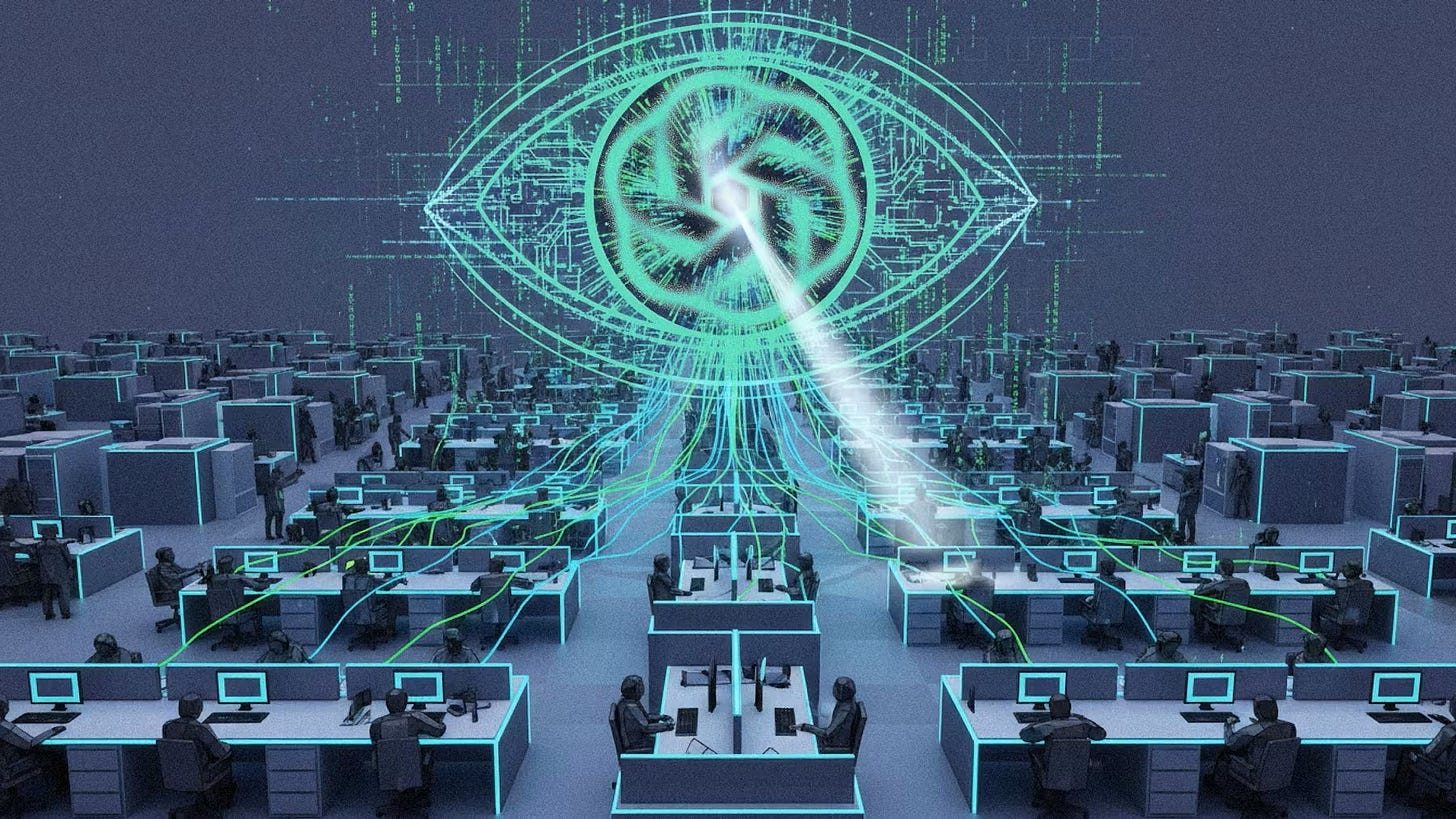

OpenAI applies models to internal enforcement

Source: The Information

Reports indicate OpenAI has used an internal version of ChatGPT to analyze potential leaks by mapping document access and communication pathways across the organization. This is not a public feature. It is internal governance powered by model-searchable memory.

Large language models are effective at reconstructing narratives from fragments. Emails, document revisions, chat logs, access permissions. When paired with document logs and access graphs, they become forensic systems. They do not simply summarize content. They identify patterns. They connect dots that would otherwise require weeks of manual investigation.

Given a sophisticated internal search graph, these models can correlate conversations, document access, and revision histories across the organization. Layer in external information streams (public reporting, social chatter, market signals) and a broader analytical surface emerges. Internal discussion can be compared against what later appears outside the company. Language patterns can be tracked. Access paths can be mapped. Timing can be analyzed.

In practical terms, an organization’s informational history becomes not just searchable, but model-interpretable. Who had access to what. When changes were made. How language traveled. The model does not need certainty to be useful. It narrows the field, proposes plausible chains, and surfaces anomalies.
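To make "narrowing the field" concrete, here is a minimal sketch of the simplest version of that correlation, with no model involved. It is not a description of any system OpenAI is reported to use. The names, documents, and timestamps are invented, and the function `narrow_field` is hypothetical. It only counts, for each person, how many leaked documents they opened before the content appeared outside.

```python
from collections import Counter
from datetime import datetime

# Hypothetical access log: (person, document, time of access). All values invented.
ACCESS_LOG = [
    ("alice", "roadmap.doc", datetime(2025, 1, 6, 9, 0)),
    ("bob", "roadmap.doc", datetime(2025, 1, 7, 14, 30)),
    ("alice", "pricing.doc", datetime(2025, 1, 8, 10, 15)),
    ("carol", "pricing.doc", datetime(2025, 1, 9, 16, 45)),
    ("bob", "pricing.doc", datetime(2025, 1, 12, 11, 0)),
]

# Hypothetical leaks: (document, time the content appeared outside the company).
LEAKS = [
    ("roadmap.doc", datetime(2025, 1, 8, 8, 0)),
    ("pricing.doc", datetime(2025, 1, 10, 8, 0)),
]


def narrow_field(access_log, leaks):
    """Count, per person, how many leaked documents they opened before the leak."""
    counts = Counter()
    for doc, leaked_at in leaks:
        # A set ensures repeated opens of the same document count only once.
        people = {p for p, d, t in access_log if d == doc and t < leaked_at}
        counts.update(people)
    # Highest overlap first; this is a shortlist, not a conclusion.
    return counts.most_common()


ranking = narrow_field(ACCESS_LOG, LEAKS)
print(ranking)
```

The output is a ranking, not a verdict. Access before a leak is consistent with many innocent explanations, which is why the passage above speaks of plausible chains rather than certainty.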

Over time, systems like this may move beyond identifying leaks after the fact. They may begin to flag risk patterns before information leaves the building. Not prediction in the science fiction sense, but probabilistic alerts based on behavior, language shifts, and unusual access sequences.

At that point, AI is no longer simply reconstructing events. It is shaping internal risk posture in advance.

Why it matters

When organizational memory becomes model-interpretable, governance stops being episodic and becomes continuous.

Historically, enforcement inside companies has been reactive. An incident occurs. An investigation begins. Logs are reviewed. Interviews are conducted. Conclusions are drawn after the fact. AI compresses that cycle. It pushes the marginal cost of surveillance, reconstruction, and anomaly detection toward zero. What was once exceptional becomes ambient.

That changes the structure of the institution. Transparency is no longer dependent on policy documents or managerial oversight alone. It becomes infrastructural. If information flow can be reconstructed and correlated at scale, informal boundaries tighten automatically. The knowledge that archives are machine-readable shapes behavior long before enforcement is invoked.

The more significant shift is not leak detection. It is predictive risk management. Once models begin flagging patterns before information leaves the building, governance moves from investigation to preemption. Organizations do not simply respond to events. They shape them in advance.

AI, in this context, is not a productivity layer. It is an institutional layer. The same systems that generate content, assist customers, and mediate public interaction can also define internal norms. The stack is not only expanding outward into markets. It is consolidating inward around control.

That dual movement matters because it concentrates authority across layers that were previously distinct. External interface and internal governance begin to share the same computational substrate. And once governance runs on the same models that power the product, power is no longer modular. It is integrated.

Advertising moves into conversational space

Source: Los Angeles Times

Super Bowl messaging made the tension explicit. Anthropic positioned itself against advertising inside chat, while OpenAI is experimenting with monetization embedded in conversational interfaces.

The economic pressure is straightforward. Compute scales with usage. Subscriptions may not indefinitely absorb that cost, especially as models become more capable and more expensive to run. Advertising is the familiar lever.

But conversational systems are not display environments. They do not resemble feeds or search result pages. They feel like assistants. They respond in complete sentences. They explain reasoning. They guide decisions.

That difference matters. In search, advertising sits adjacent to results. In conversation, advertising risks blending with the answer itself. Even if clearly labeled, the surrounding narrative frame shapes perception. When a system recommends a product, a service, or a destination, the distinction between organic suggestion and paid placement becomes more complex.

Monetization in this environment is not about selling impressions. It is about influencing how recommendations are constructed.

Why it matters

If conversational systems become the primary discovery interface for search, commerce, travel, health, or entertainment, advertising will not sit on the margins. It will sit inside the decision pathway.

The battleground shifts from attention to framing. Brands will not simply compete for visibility. They will compete for inclusion in generated responses. Platforms will not just allocate ad slots. They will tune how suggestions are composed, ranked, and explained. The economics of influence move from placement to participation in the answer.

This has second-order effects. Trust becomes a strategic asset. Disclosure standards matter more. User perception of neutrality becomes part of the product itself. A system that feels compromised risks losing authority.

In this sense, monetization is not a downstream tweak. It is an architectural decision. It determines whether the conversational layer functions as advisor, marketplace, or something in between. And whoever controls that layer controls the recommendation moment.

Closing Note

What connects these developments is not simply growth. It is consolidation across layers that used to be governed separately.

Runway turns rehearsal into programmable simulation, extending beyond media into robotics and physical systems. Amazon compresses execution while building a context-aware pipeline that can potentially version stories and advertising dynamically. OpenAI applies models to internal governance, shifting enforcement from episodic investigation to continuous oversight. Advertising moves into conversational interfaces, embedding monetization inside the recommendation pathway itself.

Each move is defensible on its own. Together, they describe vertical integration. This is not the traditional media model of owning production, distribution, and exhibition. It is a new configuration. Simulation defines what is feasible. Production systems define what is practical. Internal governance defines what is permissible. Conversational interfaces define what is surfaced and monetized. When those layers sit inside the same ecosystem, leverage compounds.

The most important shift is not capability. It is coordination. When the same computational substrate powers creative rehearsal, pipeline orchestration, institutional oversight, and consumer recommendation, the boundaries between them blur. The company that controls the rehearsal space influences feasibility. The company that controls the pipeline influences variation. The company that controls internal graphs influences behavior. The company that controls the conversational layer influences choice.

Power becomes integrated rather than modular. This does not imply collapse or inevitability. Markets remain competitive. Regulation remains active. New entrants will emerge. But the direction is clear. The seams between layers are softening, and the actors who move vertically will define norms before others realize those norms have hardened.