BuffConf 2025: AI at the Edge, Video in Real Time

Where GenAI, monetization, and metadata are reshaping video infrastructure

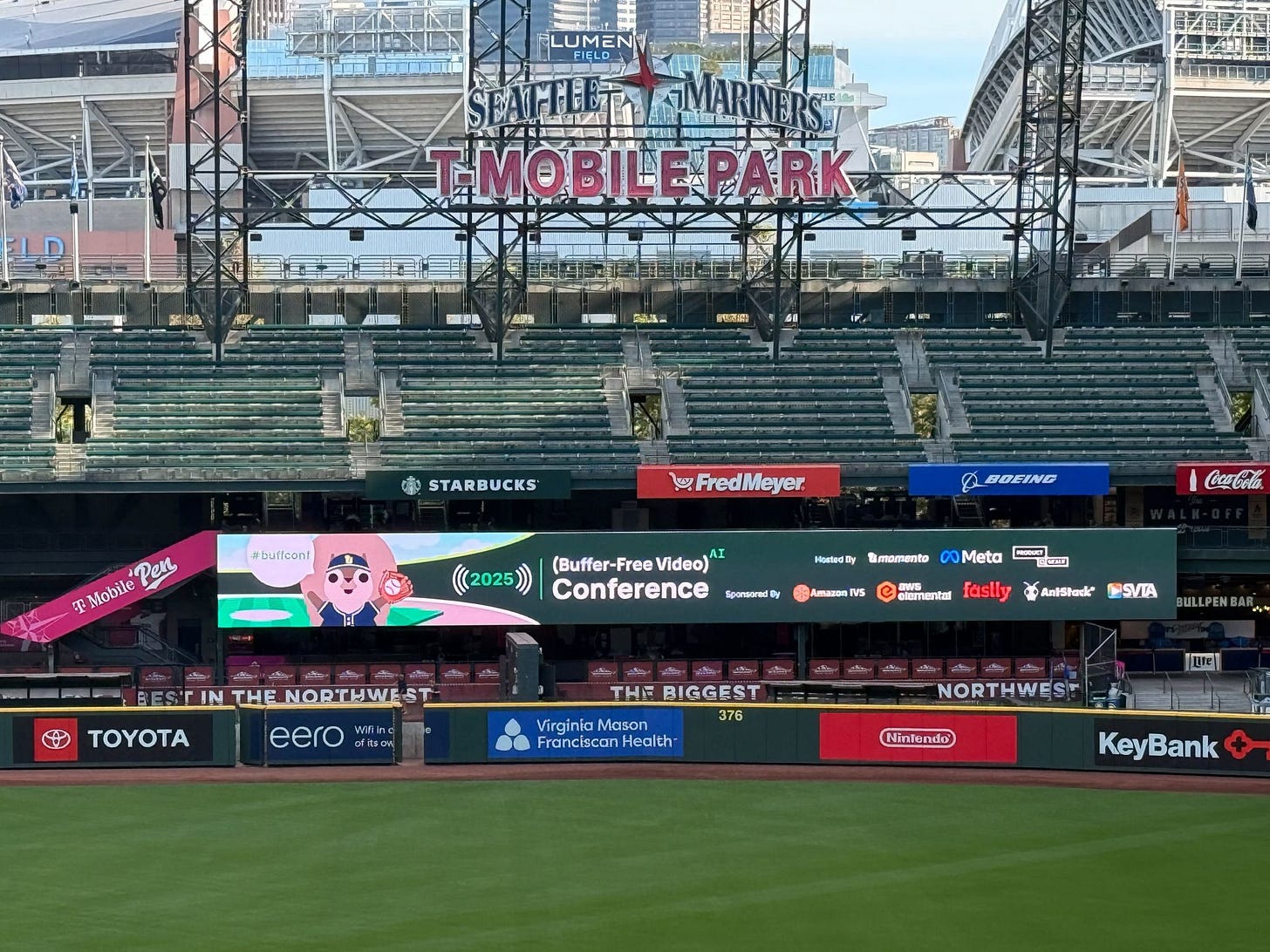

This week I joined a few hundred engineers and architects at BuffConf, a new technical summit hosted by Momento and several sponsors at T-Mobile Park and SURF Incubator in Seattle. This wasn’t a vision fest or a “what if” retreat. It was a pragmatic, detailed look at what’s already working (and sometimes breaking) across the video stack as media becomes real-time, AI-native, and monetizable at global scale.

Years ago, when I was deep in the weeds building encoders, I started thinking of myself as a media plumber, laying new pipes to move water through the system. BuffConf was full of media plumbers. These are the folks who understand that getting video to work at scale isn’t about prompts or pixels. It’s about flow: pushing bits through real pipes with real constraints. I keep returning to this metaphor because without great plumbing, the whole thing backs up, leaks, or fails in ways the audience never sees but always feels.