Signals of Shame, Signals of Change

The GPT Purity Test

I was recently added to a WhatsApp group full of friends and former colleagues. A lot of folks in there are in the middle of job searches, so the group was set up as a way to share leads, offer support, and help each other out. By the way, reach out to me if you’d like to be added to the group. They’re a great bunch of folks and I’m sure you’ll enjoy meeting them.

One of the first things people did was introduce themselves. And that’s where something odd started to happen.

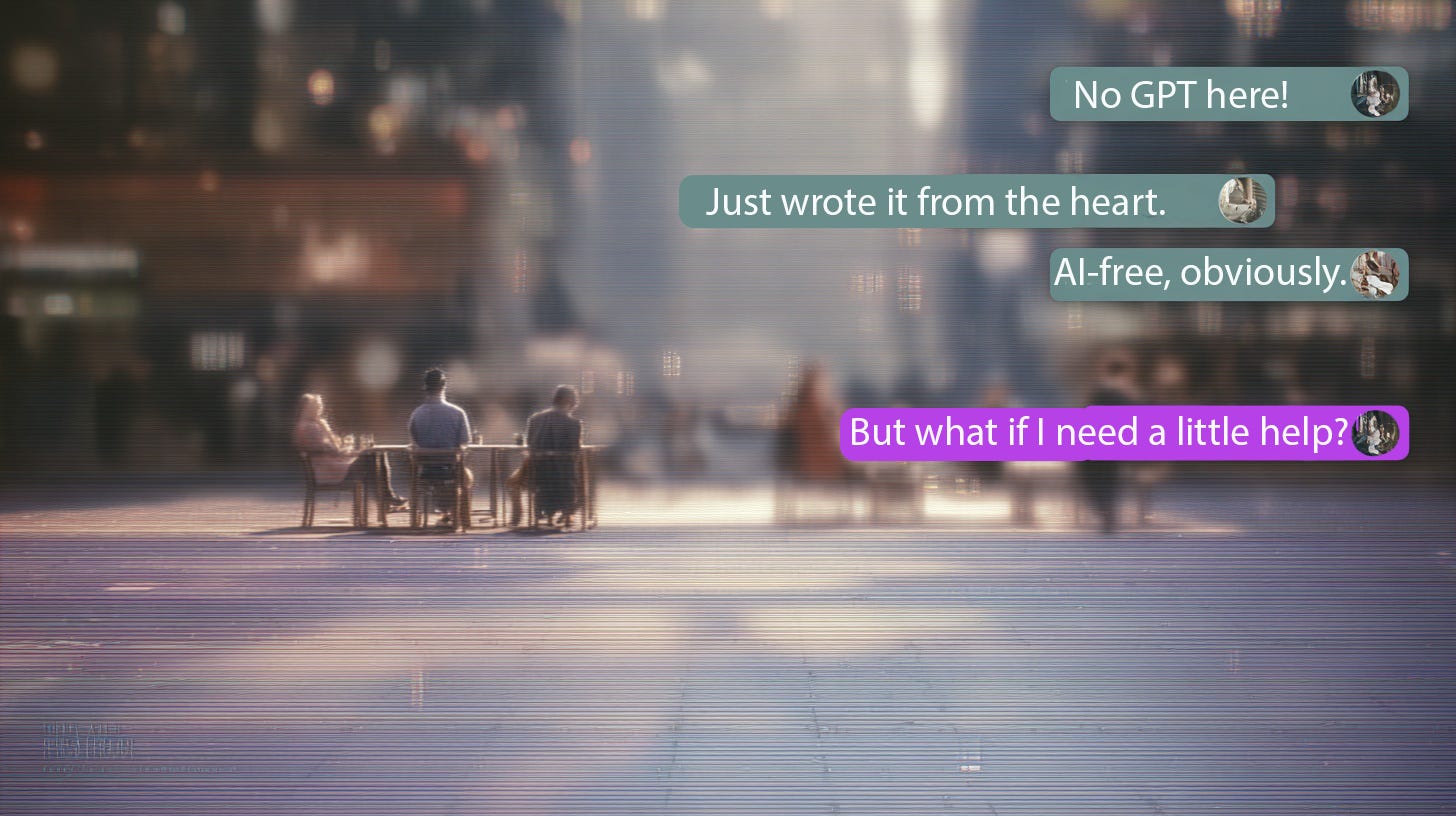

Some intros were clearly polished: thoughtful, well-written, personal. A few mentioned that they used GPT to help shape what they wrote. Then came a string of replies that made a point of saying the opposite. “No GPT here.” “Wrote this on my own.”

I had already introduced myself by the time this all happened, and I’ve not quite been able to articulate why I found this conversation disappointing. Not because I don’t know what to say, but because I’ve been sitting with the weirdness of that moment. It wasn’t about who u…