The Collapse Layer

Deep Cut #4 in the Radicalization Series

When Defaults Break

There’s a moment when a system built to glide suddenly doesn’t. Not because the technology fails, but because the world stops accepting its answers.

A newsroom freezes an AI feature after a “helpful” summary misstates the facts and nobody can explain how it happened. A creator community realizes its work has vanished from feeds, not removed, just de-ranked into irrelevance, and the screenshots start circulating. A procurement review stalls when auditors ask for logs the vendor can’t provide. A model update ships, a workflow breaks overnight, and the explanation comes only after the damage is done.

This is not ideology colliding with ideology. It’s defaults colliding with reality.

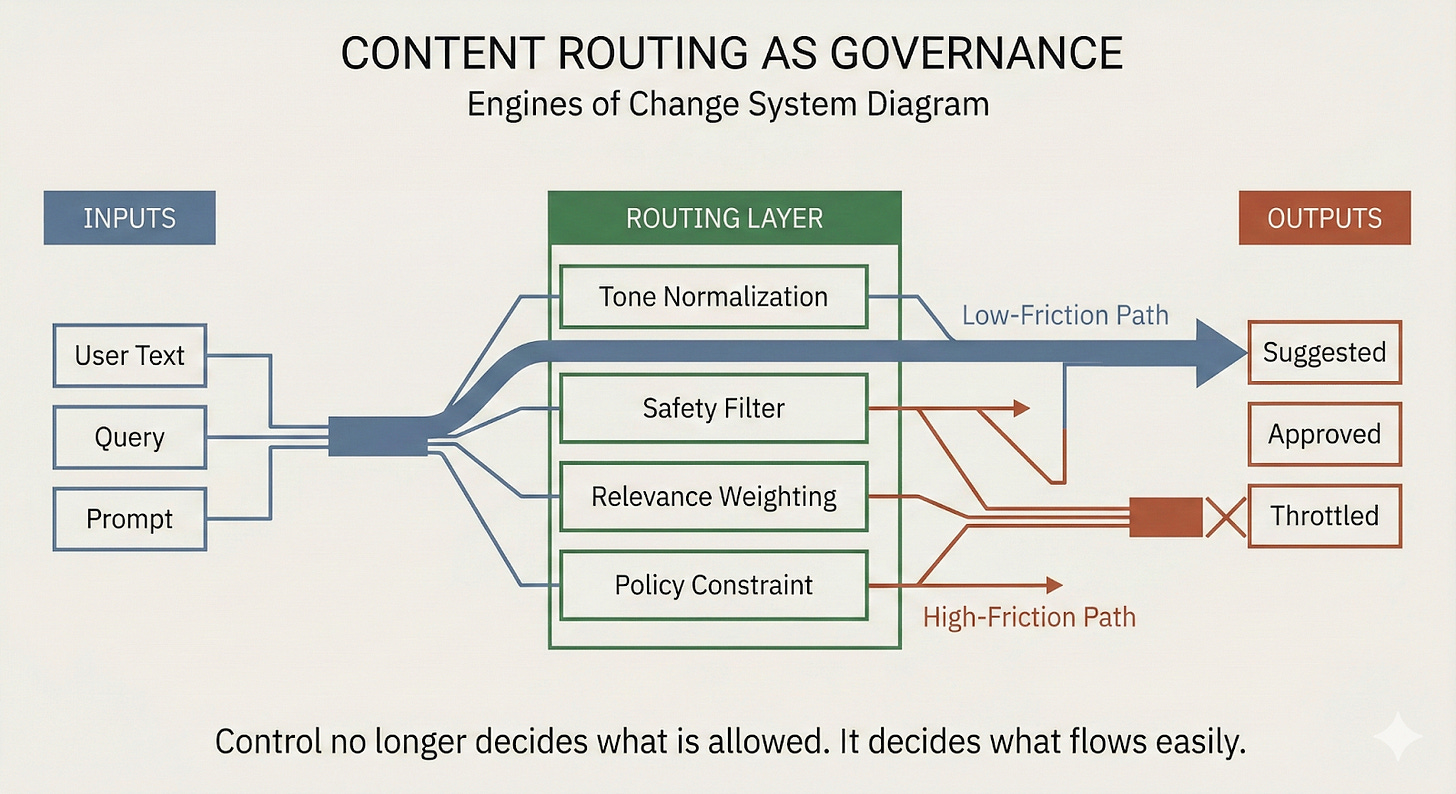

For a long time, these systems worked because they smoothed things over. They reduced friction. They resolved ambiguity. They made decisions that felt automatic, reasonable, inevitable. But smoothing only works until it encounters resistance it can’t absorb. When that happens, the system has to explain itself. And that’s the moment it’s least prepared for.

Collapse isn’t the end of the system. It’s the end of the illusion that the system is neutral.

This is the phase where questions stop being deflected by UX. Where “that’s just how it works” stops satisfying anyone. Where the values embedded in the defaults are dragged into the open and treated like what they are: choices made by people, enforced by machines, scaled without consent.

My first three essays mapped how we got here. This one is about what happens next.

Because once resistance shows up, the system doesn’t get to hide behind optimization anymore. It has to answer for what it’s been shaping all along.