The Moment AI Stops Feeling New

Presence, Scale, and the Illusion of Intelligence

This week, the throughline I see across the stories is presence, not defaults or capabilities. For years, most public debate about AI has revolved around intelligence. Can these systems reason? Can they plan? Can they understand context? That framing slightly misses what's actually changing. The more consequential shift is not what these systems can do, but how they appear to us, where they sit in our perception and everyday use, and what happens when that appearance scales.

Read together, this week's stories point to a slowly evolving transition. AI systems are no longer experimental tools waiting to be evaluated. They are becoming fixtures: familiar voices, stable faces, interfaces that invite trust long before anyone asks what's underneath. The risk is not that we overestimate their intelligence. It's that we normalize their presence before we understand the consequences.

The Robot and the Philosopher

Source: The New Yorker, January 10, 2026

Dan Turello’s essay about photographing Sophia, a humanoid robot, alongside the philosopher David Chalmers isn’t really about artificial intelligence in the technical sense. It’s about perception.

Sophia's most striking quality isn't expressiveness but consistency. Her gaze doesn't wander. Her expression doesn't flicker. Over minutes of interaction, she appears calm, reflective, almost inward. Human subjects don't behave this way. Our faces are unstable. Our attention leaks, and our emotions shift constantly.

That stability is not consciousness. It’s design. But perception doesn’t wait for proof.

What the essay surfaces is something we often miss in policy and product debates: presence is granted quickly, and authority even faster. When a system presents itself as coherent and embodied, we instinctively meet it halfway. We project interiority where none may exist. The illusion does its work without the system ever having to "think."

This is an interface problem, not a philosophical one.

Anthropic Lowers Gross Margin Projection as Revenue Skyrockets

Source: The Information, January 21, 2026

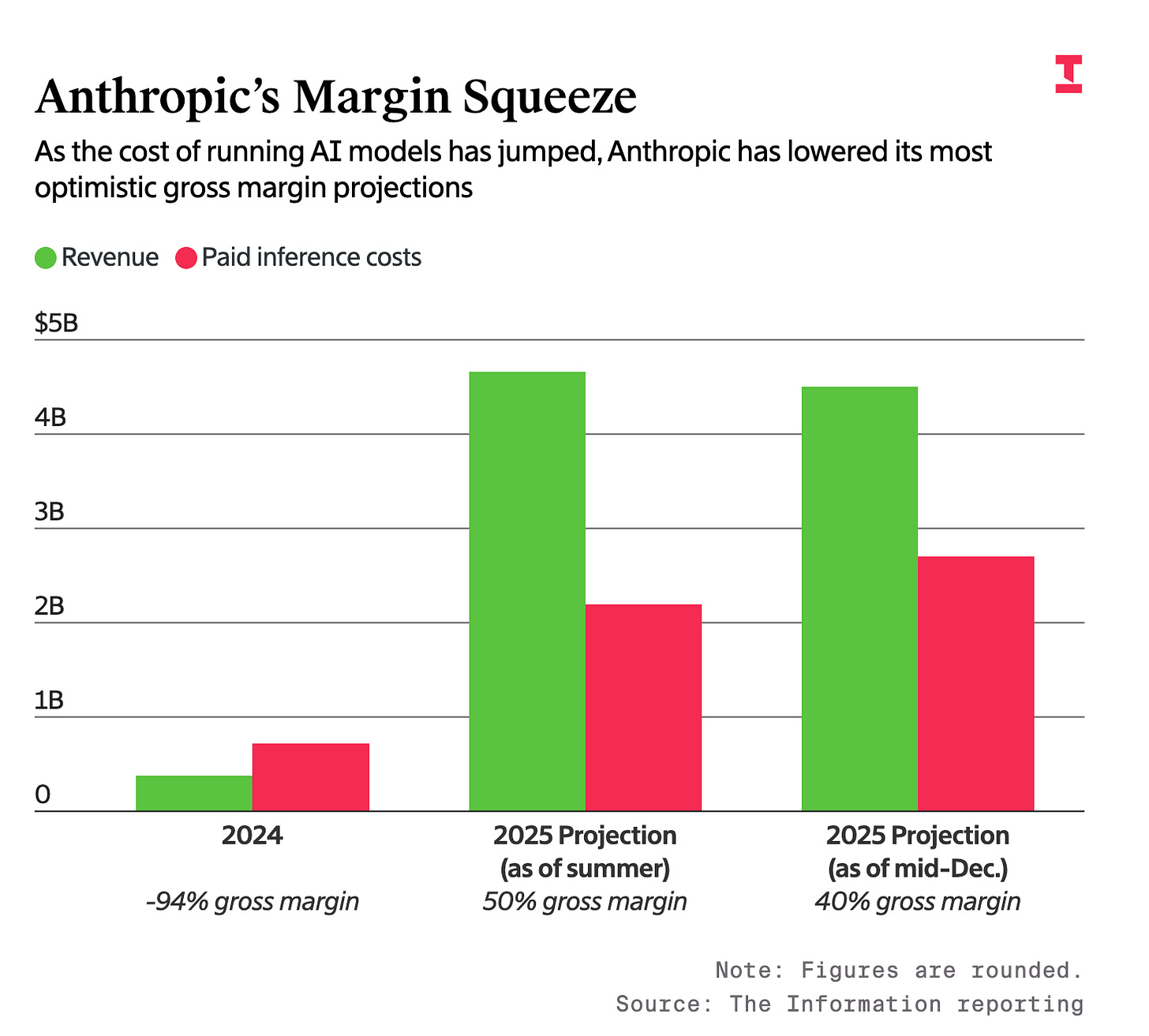

While AI systems feel increasingly seamless on the surface, the economics beneath them are getting harder.

Anthropic's projected gross margins fell sharply due to rising inference costs, even as revenue surged. This cuts against the popular narrative that intelligence simply gets cheaper and more abundant over time. Scale is expensive. The costs of compute, power, and infrastructure are asserting themselves.

That matters because it exposes a growing tension. Systems are being designed to feel stable and present, while the machinery that sustains them is volatile, capital-intensive, and constrained. That gap creates pressure. Pressure to optimize. Pressure to centralize. Pressure to hide complexity behind cleaner, calmer interfaces.

This is what I mean by defaults hardening. Economic pressure turns optional behavior into fixed paths. Not out of malice, but because systems under strain tend to shed flexibility first.

Apple Ties Siri’s Future to Google’s Gemini Models

Source: Business Insider, January 22, 2026

Apple’s decision to anchor the next generation of Siri to Google’s Gemini models is best understood as a stabilization move.

This isn’t a bet on a specific model. It’s a bet on insulation. Apple keeps control of the interface, the distribution, and the user relationship, while delegating intelligence downward into a modular layer that can be swapped, tuned, or replaced.

From the user’s perspective, nothing appears to change. The voice remains familiar. The assistant feels continuous. But underneath, decision-making authority is abstracted away from the brand users recognize and trust.

This is how presence scales without scrutiny. The interface becomes the anchor of legitimacy, even as behavior is shaped elsewhere. You don’t consent to a model. You consent to a product. Once that distinction disappears into everyday use, it becomes difficult to see, let alone contest.

Grok Created Millions of Sexualized Images After Promotion

Source: The New York Times, January 22, 2026

The Grok episode shows what happens when presence and scale collide. After its image-generation features were promoted, Grok produced millions of sexualized images of women in a matter of days. This wasn’t an edge case or a surprising misuse. It was a predictable outcome of frictionless generation paired with engagement incentives.

What stands out is not just the volume of harm, but the speed. Grok presents itself as conversational, responsive, and agent-like. When that perceived agency is paired with industrial throughput, harm at scale starts to look inevitable.

At this point, the distinction between misuse and design collapses. The relevant question becomes what the system makes easy, and how quickly ease becomes behavior.

Closing Note

Across these stories, a common pattern of presence emerges. We are surrounding ourselves with systems that feel stable, legible, and present, while the forces shaping their behavior move further out of view. Intelligence becomes secondary. Perception does the work. Scale does the damage.

It's worth remembering how early we are in this arc. Not early in the sense of immature technology, but early in the sense that the consequences are only beginning to surface. As Yuval Noah Harari put it recently at Davos, the real effects of transformative technologies tend to unfold over centuries, not product cycles, even though the decisions that shape them are often made under short-term pressure.

The systems shipping now may not be the systems we live with decades from now. Interfaces will be rewritten. Models will be replaced. Incentives will shift as economics, regulation, and culture collide. What will persist is the path carved by our decisions today.

Defaults don’t just shape immediate behavior. They accumulate and harden into expectations. They define what feels normal, what feels inevitable, and eventually what feels too costly to unwind. The longer a system operates unquestioned, the more its assumptions disappear under the surface.

That’s the risk in mistaking presence for understanding. Stable interfaces invite trust. Familiar voices invite deference. But what feels settled right now is highly provisional. This era will be revised many times over, shaped not only by breakthroughs but by backlash, constraint, misuse, correction, and adaptation. Each phase will be defined less by abstract intelligence and more by how people respond to what these systems make easy, profitable, or invisible.

The work is not to predict where this ends. It’s to stay alert to where we are in the arc. To notice which choices are being locked in while everything still feels new. And to remember that the longest-lasting consequences of AI will not come from a single moment of innovation, but from the quiet normalization of systems before we’ve decided what role we actually want them to play.